We clearly understand that the future is now. When a client asked us for a mix of advanced technologies and the best equipment to create an innovative approach to AR, we knew that this project was perfectly tailored to our know-how. At xBerry, we contribute to the technological transformation of our reality. Here, we share how we approached this project, along with our cutting-edge solutions.

Challenge

A Swedish startup was looking for a way to take augmented reality to the next level.

The idea was complex: our client needed an almost futuristic system that lets users interact with interfaces and objects displayed in AR by performing gestures with their hands.

At xBerry, we are always looking for the best solutions for even the most prosaic tasks, and this time the complexity of the project came with a number of challenges.

To design a suitable system, we needed to solve issues ranging from real-time environment mapping to compatibility with third-party hardware and advanced hand detection.

Armed with all this knowledge and experience, we addressed the following problems and created the best-suited solution.

Goals

First of all, we needed to ensure that the system was capable of tracking the environment in real time, using multi-camera mapping.

To make third-party hardware compatible with our solution, we modified the Linux kernel and libinput. The app needed to be compatible with all Wayland-capable Linux applications. Next, we had to combine stereoscopic and infrared-based depth vision. The stereoscopic camera extracted the object’s visual features, while the infrared-based depth camera provided high-resolution, high-precision spatial data.
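One way to picture the combination of the two camera types is to take feature locations found in the color image and look up their distance in the depth image. The following is a minimal sketch of that idea, not the project's actual code. It assumes the depth map is already aligned pixel-for-pixel with the feature image and that the camera follows a simple pinhole model; the function names and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]


def backproject(u: int, v: int, depth_m: float,
                fx: float, fy: float, cx: float, cy: float) -> Point3D:
    """Turn a pixel (u, v) with a known depth into a 3D point (pinhole model)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def features_to_points(features: Sequence[Tuple[int, int]],
                       depth_map: Sequence[Sequence[float]],
                       fx: float, fy: float, cx: float, cy: float) -> List[Point3D]:
    """Attach depth readings to visual features.

    Assumption: depth_map[v][u] holds the distance in meters for pixel (u, v),
    and a value of 0 means the depth sensor returned no reading there.
    """
    points = []
    for u, v in features:
        depth_m = depth_map[v][u]
        if depth_m <= 0:
            continue  # skip features without a valid depth reading
        points.append(backproject(u, v, depth_m, fx, fy, cx, cy))
    return points


# Hypothetical 3x3 depth map (meters) and two feature pixels.
depth_map = [
    [0.0, 1.5, 1.5],
    [1.8, 2.0, 2.0],
    [1.8, 2.1, 2.2],
]
points = features_to_points([(2, 1), (0, 0)], depth_map,
                            fx=2.0, fy=2.0, cx=1.0, cy=1.0)
print(points)
```

In a real pipeline the two sensors would first need to be calibrated against each other so that the pixel-for-pixel alignment assumed here actually holds.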

To get accurate results, we needed customized equipment. Cameras had to capture the whole image at once, maintaining full synchronization with external self-timers. The equipment was ordered in India.

The gesture recognition system that was available at the time of development could only track the user’s hand from a pre-specified point. We needed a solution that would detect the user’s hand and interpret the user's dynamic gestures, such as swipe up, down, left, and right, bloom, and click.
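To illustrate what interpreting a dynamic gesture can mean in practice, here is a small sketch that classifies a swipe from a sequence of tracked hand positions. It is an illustrative heuristic under stated assumptions, not the recognizer built for this project: the function `classify_swipe`, the normalized coordinate convention (x grows to the right, y grows downward), and the `min_distance` threshold are all hypothetical. Bloom and click are not covered, since they depend on finger pose rather than hand movement.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def classify_swipe(track: List[Point], min_distance: float = 0.15) -> str:
    """Classify a hand trajectory as 'up', 'down', 'left', 'right', or 'none'.

    track: hand positions over time in normalized image coordinates
           (assumed convention: x grows to the right, y grows downward).
    min_distance: smallest net movement that counts as a swipe.
    """
    if len(track) < 2:
        return "none"

    # Net displacement between the first and last tracked position.
    dx = track[-1][0] - track[0][0]
    dy = track[-1][1] - track[0][1]

    if max(abs(dx), abs(dy)) < min_distance:
        return "none"  # the hand barely moved

    # The dominant axis decides the direction.
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"


print(classify_swipe([(0.2, 0.5), (0.5, 0.52), (0.8, 0.5)]))
print(classify_swipe([(0.5, 0.8), (0.5, 0.3)]))
```

A production recognizer would typically also consider speed and the shape of the path, but the net-displacement rule shows the basic idea of mapping a hand track to a discrete command.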

Solution

How does SLAM work?

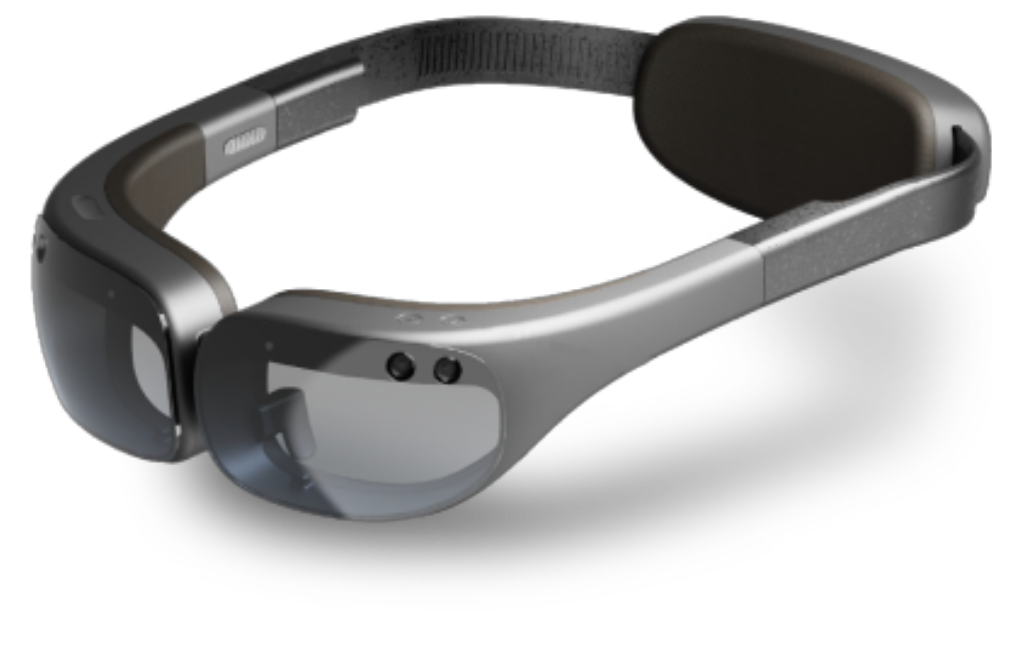

We used SLAM (simultaneous localization and mapping) to track the position of the end user in a room, based on images from a wide-angle stereoscopic camera attached to the headset. At the same time, it creates a sparse map of points used for navigation and for reconstructing the geometry of the surrounding environment (e.g. the shape of a window, the position of a wall, or the size of a table).
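Each point in such a sparse map has to be given a 3D position. With a stereoscopic camera, a common way to do this is triangulation from disparity: the same feature appears shifted horizontally between the left and right images, and the size of that shift determines its distance. The sketch below shows this single step only, assuming a rectified stereo pair and a pinhole camera model. The function name and all parameter values are hypothetical and are not taken from the project.

```python
from typing import Optional, Tuple


def triangulate(u_left: float, u_right: float, v: float,
                focal_px: float, baseline_m: float,
                cx: float, cy: float) -> Optional[Tuple[float, float, float]]:
    """Estimate the 3D position of a feature matched in a rectified stereo pair.

    u_left, u_right: horizontal pixel position of the feature in each image.
    v: vertical pixel position (identical in both images after rectification).
    focal_px: focal length in pixels; baseline_m: distance between the cameras.
    cx, cy: principal point of the left camera.
    Returns (x, y, z) in meters in the left camera frame, or None if the
    disparity is not positive (no usable depth).
    """
    disparity = u_left - u_right
    if disparity <= 0:
        return None

    z = focal_px * baseline_m / disparity  # depth from disparity
    x = (u_left - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return (x, y, z)


# Hypothetical camera: 700 px focal length, 10 cm baseline, 640x480 image.
point = triangulate(u_left=355, u_right=320, v=240,
                    focal_px=700, baseline_m=0.1, cx=320, cy=240)
print(point)
```

A full SLAM system additionally matches these points across frames to estimate how the headset has moved, which is what makes the localization and the mapping simultaneous.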

Results

We designed and implemented a complex system compatible with a wide range of applications.

Not only does it recognize and process hand gestures in real time, but it is also generic enough to be quickly adapted to almost any hardware. As such, it can be used in various fields, from architecture to engineering, marketing, and more.

The Mixed Reality System is a cutting-edge solution that builds on the already futuristic functionality of AR, adding to the mix the one element that was missing: the ability to control an AR interface and objects with a wave of the hand.

Tech Stack

What are the customers saying?

I strongly recommend xBerry for their professionalism and reliability in software development. I believe that our cooperation has been a role model for transparency, high quality, and commitment. The project's goal was to solve a very difficult problem at the border of machine learning, augmented reality, and image processing. Our project required research, innovative technology, and excellent programming skills. I am stunned by how smoothly our project ran, despite its difficulty and time constraints.